Beyond the Buzzword: Building MuseMatch, a Truly AI-Native Game

How NYX Studio Labs developed a trivia game where artificial intelligence is not just a feature, but the foundation of its creation, content, and quality control.

Introduction: The Spark of an Idea

What if a game could tap into the vast, shared consciousness of pop culture? What if every puzzle felt like a unique piece of art, a dreamlike interpretation of a song you love? This was the founding idea behind MuseMatch, our new music trivia game. But the real question wasn't what to build, but how.

From its inception, MuseMatch was designed to be AI-native. This doesn't just mean using AI to generate a few images. It means weaving artificial intelligence into the very fabric of the application—from the code that runs it to the content that fills it, and even the process that validates it. This is the story of how we built not just a game, but a new kind of creative engine.

The Architecture - An AI Development Partner

Building a modern, scalable application is a monumental task. For MuseMatch, we treated our AI assistant, Gemini 2.5 Pro, as a core development partner. The entire web app, built on a modern React Native + Firebase architecture, was co-developed with AI. Cursor AI was also instrumental: it could load the entire codebase, which let it help debug and extend features with the context of the whole ecosystem. Llama4 handled some of our LLM tasks, while Gemma3 provided some of the AI-peer model validation.

AI co-development went far beyond simple code completion. We engaged in a collaborative dialogue, brainstorming architectural solutions, debugging complex functions, and optimizing the database structure. This approach allowed us to build and iterate on the application at a speed that would have been impossible otherwise, proving that AI can be a powerful force multiplier for technical founders and small teams. The result is a robust and scalable platform, ready for future expansions into new categories like movies and books.

The Content Engine - A Human-AI Partnership

The heart of MuseMatch is its ever-growing library of puzzles. Manually creating thousands of unique images and fact-checking countless hints would be an insurmountable task. This is where our bespoke, three-phase content pipeline comes in—a workflow designed to blend the scale of AI with the nuance of a human creative director.

Phase 1: The Spark (Concept & Scaffolding)

Every puzzle pack begins with a conversation. The Creative Director (human) and the AI Assistant work together to define a theme, like "Classic Hits." The AI then acts as an incredibly knowledgeable research assistant, generating a master list of 100 thematically appropriate songs. The human director then curates this list, ensuring it aligns with the artistic vision, creating the foundational blueprint for the entire pack.

Phase 2: The Craft (Puzzle Creation)

This is where the human-in-the-loop process truly shines. For each song, the AI generates several distinct, well-researched creative prompts for the puzzle's image. The human director selects the best concept and then uses their own external tools (like FLUX and ComfyUI) to generate and perfect the final image, maintaining full artistic control.

Once the image is approved, the AI generates a list of candidate hints across three difficulty levels, all fact-checked and adhering to our content guidelines. The human director then makes the final selection, choosing the three hints that best complement the art and create a fair, logical path to the solution.
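To make the hand-off at the end of Phase 2 concrete, here is a minimal sketch of how a finished puzzle record and a check on the director's hint selection might look. The article does not publish MuseMatch's schema, so every name here (`Puzzle`, `Hint`, `checkHintSelection`) is hypothetical, and the rule of exactly one hint per difficulty level is an assumption rather than something the pipeline is stated to enforce.

```typescript
// Hypothetical data shapes; not MuseMatch's actual schema.
type Difficulty = "easy" | "medium" | "hard";

interface Hint {
  difficulty: Difficulty;
  text: string;
}

interface Puzzle {
  answer: string;      // song title the player must guess
  imagePrompt: string; // concept the director picked from the AI's suggestions
  imageUrl: string;    // final image made with the director's own tools
  hints: Hint[];       // the hints the director selected
}

const DIFFICULTIES: Difficulty[] = ["easy", "medium", "hard"];

// Returns a list of problems with the hint selection; an empty list means it passes.
// Assumption: a valid selection has three hints, one per difficulty level.
function checkHintSelection(puzzle: Puzzle): string[] {
  const problems: string[] = [];

  if (puzzle.hints.length !== 3) {
    problems.push(`expected 3 hints, got ${puzzle.hints.length}`);
  }

  for (const level of DIFFICULTIES) {
    const count = puzzle.hints.filter((h) => h.difficulty === level).length;
    if (count !== 1) {
      problems.push(`expected 1 ${level} hint, got ${count}`);
    }
  }

  const answer = puzzle.answer.toLowerCase();
  for (const hint of puzzle.hints) {
    // A hint that contains the answer verbatim gives the puzzle away.
    if (hint.text.toLowerCase().indexOf(answer) !== -1) {
      problems.push(`${hint.difficulty} hint reveals the answer`);
    }
  }

  return problems;
}
```

A check like this would only catch mechanical slips. Whether the hints complement the art and form a fair path to the solution remains the director's call.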

Phase 3: The Gauntlet (AI-Peer Validation)

This is perhaps the most forward-thinking part of our process. How do we ensure a puzzle is truly solvable? We ask another AI.

We developed a "blind" playthrough simulation in which a separate, multimodal AI model is given the final image and the curated hints. Its task is to analyze the visual and textual clues and attempt to guess the correct answer. Because this model had no part in creating the puzzle, its "AI-peer validation" report gives us an independent check on the puzzle's logical integrity. It's a new form of quality control, one that uses the analytical power of one model to check the creative output of another, all before the final human "GO / NO-GO" decision is made.
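The scoring side of a blind playthrough can be sketched as below. This is an illustration under stated assumptions, not our production code: the call to the validator model is left out entirely, the hints are assumed to be revealed one at a time (the article only says the model receives the image and the hints), and `PlaythroughReport`, `normalize`, and `evaluatePlaythrough` are hypothetical names.

```typescript
// Hypothetical report shape for one blind playthrough.
interface PlaythroughReport {
  solved: boolean;
  hintsNeeded: number | null; // hints revealed when the first correct guess was made
  guesses: string[];
}

// Lowercase, drop punctuation, and collapse whitespace so that
// "Hey Jude!" and "hey  jude" compare equal.
// Limitation: this simple version also drops non-ASCII letters.
function normalize(title: string): string {
  return title
    .toLowerCase()
    .replace(/[^a-z0-9 ]/g, "")
    .replace(/\s+/g, " ")
    .trim();
}

// Assumption: guesses[i] is the validator model's guess after seeing
// the image plus the first i + 1 hints.
function evaluatePlaythrough(answer: string, guesses: string[]): PlaythroughReport {
  const target = normalize(answer);
  const normalizedGuesses = guesses.map((g) => normalize(g));
  const index = normalizedGuesses.indexOf(target);

  return {
    solved: index !== -1,
    hintsNeeded: index === -1 ? null : index + 1,
    guesses,
  };
}
```

For example, `evaluatePlaythrough("Hey Jude", ["Let It Be", "hey jude!"])` reports the puzzle as solved with two hints. The report informs the human GO / NO-GO decision; it does not replace it.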

The Admin App - Empowering the Human Curator

[Screenshot: the MuseMatch Admin App interface]

To ensure the human remains firmly in the director's chair, we developed a dedicated MuseMatch Admin App. This powerful tool completely decouples the day-to-day content management from the core application development. It's the ultimate expression of our human-in-the-loop philosophy.

Through the Admin App, a human curator can manually intervene at any stage. They can add new puzzles from scratch, edit AI-generated hints for tone or clarity, and even replace an AI-generated image with a hand-picked alternative to craft a truly special, bespoke puzzle. This provides a vital layer of quality control and allows for a level of artistic nuance that a fully automated system could never achieve on its own. It ensures that while the AI provides the scale, the final product always reflects a human touch.

The Social Media Engine

A key feature of the Admin App is its ability to act as a marketing content generator. With a single click, the app can take a completed puzzle—the image, the curated hints, and the answer—and package it into ready-to-post formats for social media. It can generate assets for Instagram carousels, frames for TikTok videos, and copy for posts, all pre-formatted and ready to go. This turns every puzzle created for the game into a potential marketing asset, creating a seamless and incredibly efficient pipeline from content creation to audience engagement.
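As a rough illustration of the packaging step, the sketch below turns a completed puzzle into an ordered list of carousel slides plus a caption. The slide order, the caption text, and the names `FinishedPuzzle`, `Slide`, and `buildCarouselPost` are all assumptions made for this example. It does not call any social platform API.

```typescript
// Hypothetical shapes for illustration only.
interface FinishedPuzzle {
  answer: string;
  imageUrl: string;
  hints: string[];
}

type Slide =
  | { kind: "image"; imageUrl: string }
  | { kind: "text"; text: string };

interface CarouselPost {
  slides: Slide[];
  caption: string;
}

// Assumed slide order: puzzle image first, then one slide per hint,
// then the answer as the final reveal.
function buildCarouselPost(puzzle: FinishedPuzzle): CarouselPost {
  const hintSlides: Slide[] = puzzle.hints.map(
    (hint, i): Slide => ({ kind: "text", text: `Hint ${i + 1}: ${hint}` })
  );

  const slides: Slide[] = [
    { kind: "image", imageUrl: puzzle.imageUrl },
    ...hintSlides,
    { kind: "text", text: `Answer: ${puzzle.answer}` },
  ];

  return {
    slides,
    caption: "Can you name the song? Swipe for hints. #MuseMatch",
  };
}
```

Keeping the output as plain data means the same puzzle could feed other formats, such as video frames or post copy, through separate formatters.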

The Feedback Loop - Data-Driven Curation

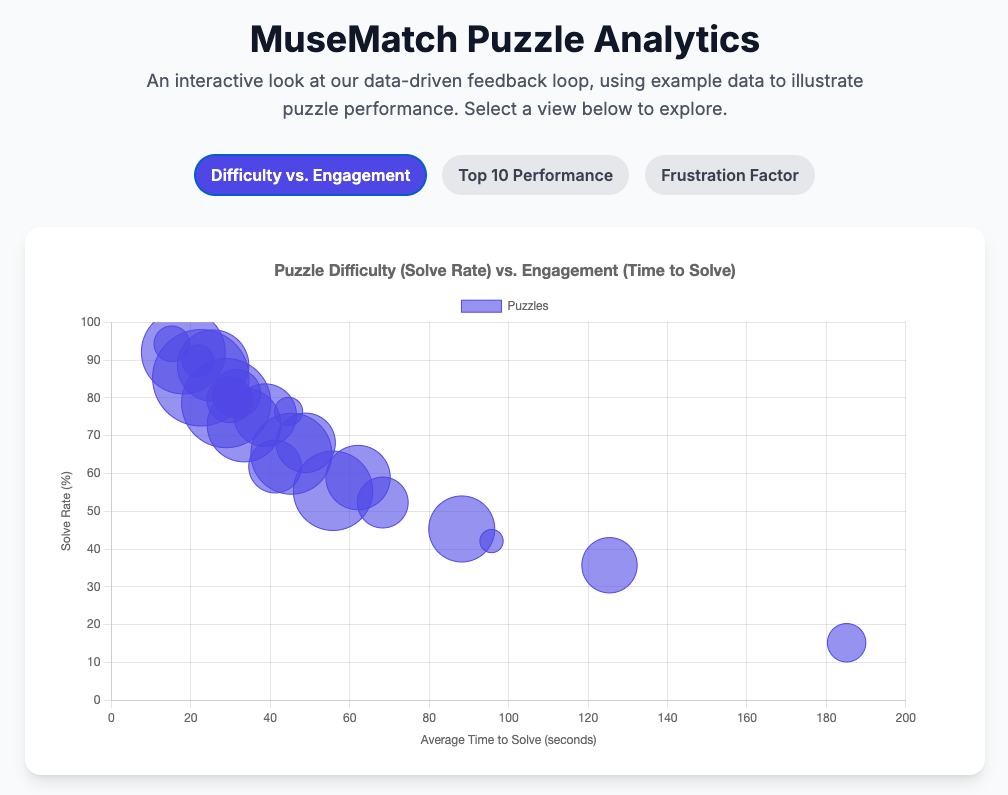

Example MuseMatch Analytics for the top-performing puzzles

Example MuseMatch Analytics showing difficulty vs. engagement

A great game isn't just about great content; it's about a great experience. To ensure our puzzles are the perfect balance of challenging and fair, we built a robust analytics framework. This isn't just about tracking scores; it's about creating a data-driven feedback loop that continuously improves the game.

We evaluate every puzzle against key performance metrics:

Solve Rate: The primary indicator of difficulty. What percentage of players successfully solve a given puzzle?

Hints Used: On average, how many hints do players need? A puzzle that consistently requires all three hints might be too obscure or its art too abstract.

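Time to Solve: This metric helps us differentiate between an easy puzzle and one that is enjoyably challenging. A quick solve suggests the puzzle is easy, while a longer solve that still ends in success suggests a satisfying challenge.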
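The three metrics above can be computed from per-attempt records along these lines. This is a sketch under assumptions: the `Attempt` shape and the `summarizePuzzle` function are hypothetical, hints used are averaged over all attempts, and time to solve is averaged over solved attempts only. The article does not specify either choice.

```typescript
// Hypothetical record of one player's attempt at one puzzle.
interface Attempt {
  solved: boolean;
  hintsUsed: number; // 0 to 3
  seconds: number;   // time spent on the puzzle
}

interface PuzzleStats {
  solveRate: number;                // fraction of attempts that were solved
  avgHintsUsed: number;             // mean over all attempts
  avgSecondsToSolve: number | null; // mean over solved attempts; null if none
}

// Returns null when there are no attempts, since no rate can be computed.
function summarizePuzzle(attempts: Attempt[]): PuzzleStats | null {
  if (attempts.length === 0) {
    return null;
  }

  const solved = attempts.filter((a) => a.solved);
  const totalHints = attempts.reduce((sum, a) => sum + a.hintsUsed, 0);
  const totalSolveSeconds = solved.reduce((sum, a) => sum + a.seconds, 0);

  return {
    solveRate: solved.length / attempts.length,
    avgHintsUsed: totalHints / attempts.length,
    avgSecondsToSolve: solved.length === 0 ? null : totalSolveSeconds / solved.length,
  };
}
```

A median may be a better choice than a mean for time to solve, since a few players who leave a puzzle open can skew the average.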

This data is fed back into our content strategy, allowing us to refine our AI prompts and hint generation process. It's a self-improving system where real player engagement informs our creative direction. This analytical rigor is how we build not just fun games, but engaging, optimized digital experiences—a core capability of NYX Studio Labs.